Aurich Lawson | Getty Images

With most computer programs, even complex ones, you can meticulously trace through the code and memory usage to figure out why that program produces some specific behavior or output. That is generally not true in the field of generative AI, where the non-interpretable neural networks underlying these models make it hard for even experts to pinpoint exactly why they often confabulate information, for instance.

Now, new research from Anthropic offers a new window into what goes on inside the Claude LLM’s “black box.” The company’s new paper, “Extracting Interpretable Features from Claude 3 Sonnet,” describes a powerful new method for at least partially explaining how the model’s millions of artificial neurons fire to produce surprisingly lifelike answers to general queries.

Opening the hood

When analyzing an LLM, it is trivial to see which specific artificial neurons are activated in response to which specific query. But LLMs do not simply store different words or concepts in a single neuron. Instead, as the Anthropic researchers explain, “Each concept is represented in multiple neurons, and each neuron is involved in representing multiple concepts.”

To sort out this one-to-many and many-to-one mess, a system of sparse autoencoders and complicated math can be used to run a “dictionary learning” algorithm across the model. This procedure highlights which groups of neurons tend to be activated most consistently for specific words that appear across various text prompts.
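The idea of breaking a pattern of neuron activity into a few named features can be sketched in a handful of lines. The example below is a toy illustration, not the method from the paper: the three feature directions, their names, and the three-neuron activation are all made up, and the directions are set by hand (and kept orthogonal) rather than learned by training. It shows only the shape of the computation: an encoder that projects neuron activations onto feature directions, a decoder that rebuilds the activations from the active features, and a loss that rewards using as few features as possible.

```python
import math

S = 1 / math.sqrt(2)

# Hypothetical, hand-picked feature directions over three "neurons".
# feature_a and feature_b each span two neurons, and neurons 0 and 1
# each take part in two features.
FEATURES = {
    "feature_a": (S, S, 0.0),
    "feature_b": (S, -S, 0.0),
    "feature_c": (0.0, 0.0, 1.0),
}


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def encode(activation, bias=0.0):
    """Encoder: project onto each feature direction, then apply ReLU."""
    return {
        name: max(0.0, dot(direction, activation) + bias)
        for name, direction in FEATURES.items()
    }


def decode(codes):
    """Decoder: rebuild neuron activations as a weighted sum of directions."""
    size = len(next(iter(FEATURES.values())))
    out = [0.0] * size
    for name, strength in codes.items():
        for i, weight in enumerate(FEATURES[name]):
            out[i] += strength * weight
    return out


def loss(activation, codes, l1_weight=0.1):
    """Squared reconstruction error plus an L1 penalty on feature strengths."""
    recon = decode(codes)
    error = sum((a - r) ** 2 for a, r in zip(activation, recon))
    return error + l1_weight * sum(codes.values())


# All three neurons fire, yet only two features are needed to explain them.
activation = [2 * S, 2 * S, 1.0]
codes = encode(activation)
active = sorted(name for name, value in codes.items() if value > 1e-9)
reconstruction = decode(codes)

print(active)
print(loss(activation, codes))
```

In a real sparse autoencoder the encoder and decoder weights are learned from a large sample of model activations, and the dictionary typically contains far more features than there are neurons.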

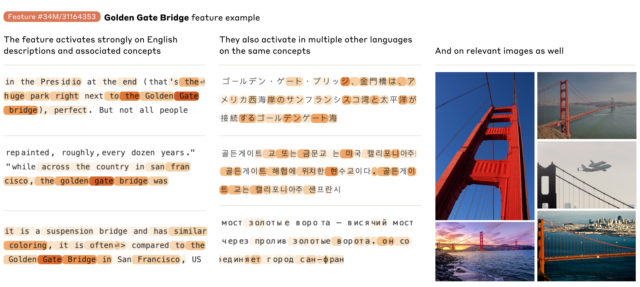

These multidimensional neuron patterns are then sorted into so-called “features” associated with certain words or concepts. These features can encompass anything from simple proper nouns such as the Golden Gate Bridge to more abstract concepts such as programming errors or the addition function in computer code, and they often represent the same concept across multiple languages and modes of communication (e.g., text and images).

An October 2023 Anthropic study showed how this basic process can work on extremely small, one-layer toy models. The company’s new paper scales that up immensely, identifying millions of features that are active in its mid-size Claude 3.0 Sonnet model. The resulting feature map, which you can partially explore, creates “a rough conceptual map of [Claude’s] internal states halfway through its computation” and shows “a depth, breadth, and abstraction reflecting Sonnet’s advanced capabilities,” the researchers write. At the same time, the researchers caution that this is “an incomplete description of the model’s internal representations” that is likely “orders of magnitude” smaller than a complete mapping of Claude 3.

Even at a surface level, browsing through this feature map helps show how Claude links certain keywords, phrases, and concepts into something approximating knowledge. A feature labeled “Capitals,” for example, tends to activate strongly on the word “capital” but also on specific city names such as Riga, Berlin, Azerbaijan, Islamabad, and Montpelier, Vermont, to name just a few.

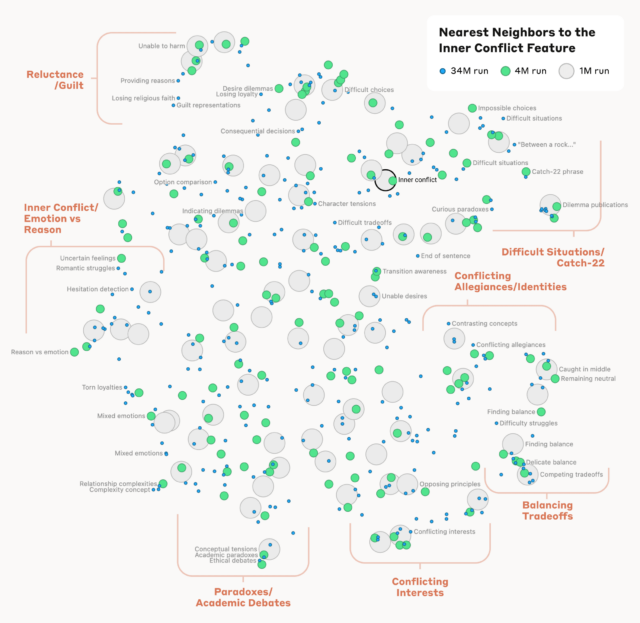

The study also calculates a mathematical measure of “distance” between different features based on their neural similarity. This process results in “feature neighborhoods” that are “often organized into geometrically related clusters that share a semantic relationship,” the researchers write, adding that “the internal organization of concepts in an AI model resembles, at least to some extent, our human concepts of similarity.” For example, the Golden Gate Bridge feature is relatively “close” to features describing “Alcatraz Island, Ghirardelli Square, the Golden State Warriors, California Governor Gavin Newsom, the 1906 earthquake, and the San Francisco-set Alfred Hitchcock film Vertigo.”
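One common way to measure how “close” two directions are is cosine similarity, and the sketch below assumes that measure for illustration. The feature vectors here are invented three-number stand-ins, not values from the paper, and the function names are hypothetical. The point is only to show how ranking features by similarity produces a “neighborhood” around a chosen feature.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Made-up feature directions, for illustration only.
feature_vectors = {
    "Golden Gate Bridge": (0.9, 0.1, 0.2),
    "Alcatraz Island": (0.8, 0.2, 0.3),
    "Golden State Warriors": (0.7, 0.4, 0.1),
    "Programming errors": (0.1, 0.9, 0.1),
}


def nearest_features(name, count):
    """Return the `count` features most similar to `name`, closest first."""
    target = feature_vectors[name]
    scored = [
        (other, cosine_similarity(target, vector))
        for other, vector in feature_vectors.items()
        if other != name
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:count]


for other, score in nearest_features("Golden Gate Bridge", 3):
    print(f"{other}: {score:.3f}")
```

With these invented numbers, the two San Francisco features rank well ahead of the unrelated programming feature, which is the kind of clustering the researchers describe.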

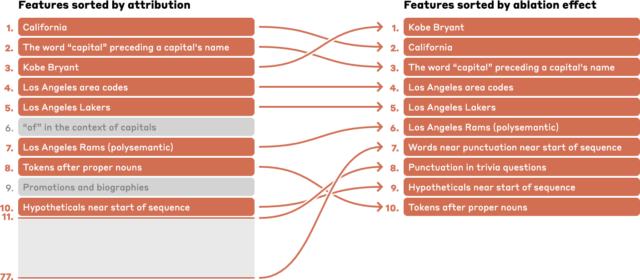

Identifying specific LLM features can help researchers map the chain of inference the model uses to answer complex questions. For example, a prompt about “The capital of the state where Kobe Bryant played basketball” shows activity in a chain of features related to “Kobe Bryant,” “Los Angeles Lakers,” “California,” “Capitals,” and “Sacramento,” to name a few calculated to have the greatest effect on the results.
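One simple way to estimate how much a feature matters to an answer is to switch it off and see how far the output moves. The sketch below assumes that ablation-style approach and a deliberately oversimplified model in which the score for an answer is a weighted sum of feature activations. The activation values, the weights, and the “Unrelated feature” entry are all invented for illustration and do not come from the paper.

```python
def output_score(activations, readout):
    """Toy score for one answer: a weighted sum of feature activations."""
    return sum(activations[name] * readout[name] for name in activations)


def ablation_effects(activations, readout):
    """Zero out each feature in turn and record how much the score drops."""
    baseline = output_score(activations, readout)
    effects = {}
    for name in activations:
        ablated = {**activations, name: 0.0}
        effects[name] = baseline - output_score(ablated, readout)
    return sorted(effects.items(), key=lambda pair: pair[1], reverse=True)


# Invented numbers: how strongly each feature fires on the prompt, and how
# strongly each one pushes the toy model toward answering "Sacramento".
activations = {
    "Kobe Bryant": 1.0,
    "Los Angeles Lakers": 0.8,
    "California": 0.9,
    "Capitals": 0.7,
    "Unrelated feature": 0.5,
}
readout = {
    "Kobe Bryant": 0.5,
    "Los Angeles Lakers": 0.6,
    "California": 1.2,
    "Capitals": 1.0,
    "Unrelated feature": 0.0,
}

ranked = ablation_effects(activations, readout)
for name, effect in ranked:
    print(f"{name}: {effect:.2f}")
```

A real model is far from a single weighted sum, so effects there have to be measured by rerunning the model or approximated with gradients. The ranking step, though, works the same way.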